Xue Bin (Jason) Peng

I'm an Assistant Professor at Simon Fraser University (SFU) and a Research Scientist at NVIDIA. I received a Ph.D. from UC Berkeley, advised by Professor Sergey Levine and Professor Pieter Abbeel. Prior to that, I received an M.Sc. from the University of British Columbia, advised by Professor Michiel van de Panne. My work lies at the intersection of computer graphics and machine learning, with a focus on reinforcement learning for motion control of simulated characters. I have previously worked for Sony, Google Brain, OpenAI, Adobe Research, Disney Research, Microsoft (343 Industries), and Capcom.

Prospective students: Information is available here.

Codebase

MimicKit: A framework with implementations of our motion imitation methods in one convenient place. [Code]

Publications

— 2025 —

Physics-Based Motion Imitation with Adversarial Differential Discriminators Ziyu Zhang, Sergey Bashkirov, Dun Yang, Yi Shi, Michael Taylor, Xue Bin Peng ACM SIGGRAPH Asia 2025 [Project page] [Paper]

|

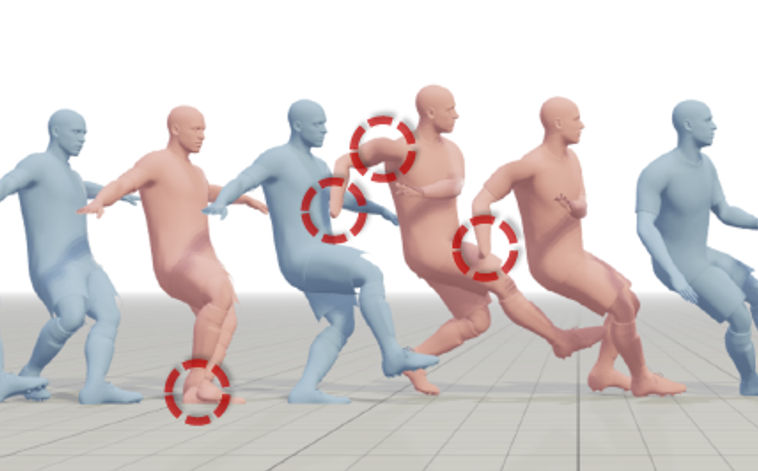

StableMotion: Training Motion Cleanup Models with Unpaired Corrupted Data Yuxuan Mu, Hung Yu Ling, Yi Shi, Ismael Baira Ojeda, Pengcheng Xi, Chang Shu, Fabio Zinno, Xue Bin Peng ACM SIGGRAPH Asia 2025 [Project page] [Paper] |

TWIST: Teleoperated Whole-Body Imitation System Yanjie Ze, Zixuan Chen, João Pedro Araújo, Zi-ang Cao, Xue Bin Peng, Jiajun Wu, C. Karen Liu Conference on Robot Learning (CoRL 2025) [Project page] [Paper]

|

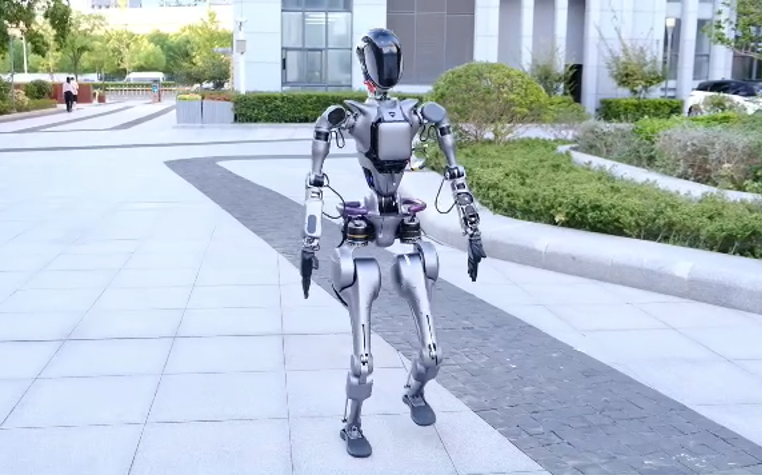

Learning Smooth Humanoid Locomotion through Lipschitz-Constrained Policies Zixuan Chen, Xialin He, Yen-Jen Wang, Qiayuan Liao, Yanjie Ze, Zhongyu Li, S. Shankar Sastry, Jiajun Wu, Koushil Sreenath, Saurabh Gupta, Xue Bin Peng International Conference on Intelligent Robots and Systems (IROS 2025) [Project page] [Paper] |

PARC: Physics-based Augmentation with Reinforcement Learning for Character Controllers Michael Xu, Yi Shi, KangKang Yin, Xue Bin Peng ACM SIGGRAPH 2025 [Project page] [Paper]

|

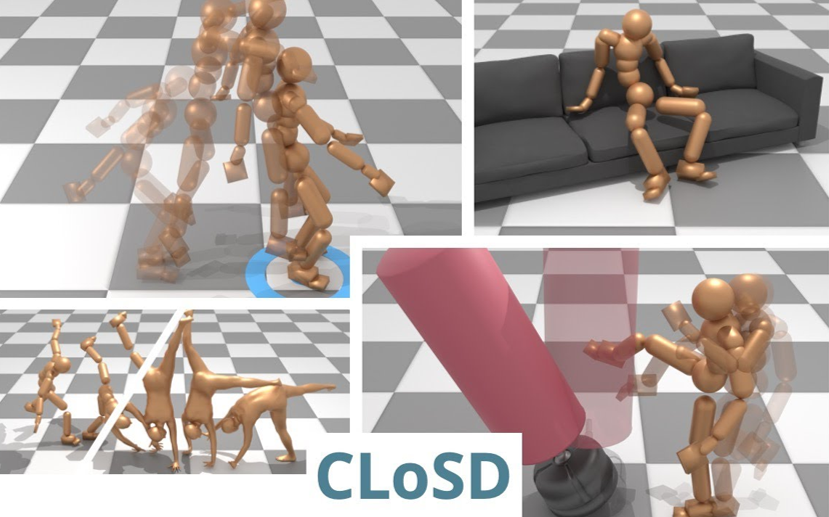

CLoSD: Closing the Loop Between Simulation and Diffusion for Multi-Task Character Control Guy Tevet, Sigal Raab, Setareh Cohan, Daniele Reda, Zhengyi Luo, Xue Bin Peng, Amit H. Bermano, Michiel van de Panne International Conference on Learning Representations (ICLR 2025) Spotlight [Project page] [Paper] |

— 2024 —

Reinforcement Learning for Versatile, Dynamic, and Robust Bipedal Locomotion Control Zhongyu Li, Xue Bin Peng, Pieter Abbeel, Sergey Levine, Glen Berseth, Koushil Sreenath The International Journal of Robotics Research (IJRR 2024) [Project page] [Paper]

HiLMa-Res: A General Hierarchical Framework via Residual RL for Combining Quadrupedal Locomotion and Manipulation Xiaoyu Huang, Qiayuan Liao, Yiming Ni, Zhongyu Li, Laura Smith, Sergey Levine, Xue Bin Peng, Koushil Sreenath International Conference on Intelligent Robots and Systems (IROS 2024) [Project page] [Paper]

MaskedMimic: Unified Physics-Based Character Control Through Masked Motion Inpainting Chen Tessler, Yunrong Guo, Ofir Nabati, Gal Chechik, Xue Bin Peng ACM Transactions on Graphics (Proc. SIGGRAPH Asia 2024) [Project page] [Paper]

Interactive Character Control with Auto-Regressive Motion Diffusion Models Yi Shi, Jingbo Wang, Xuekun Jiang, Bingkun Lin, Bo Dai, Xue Bin Peng ACM Transactions on Graphics (Proc. SIGGRAPH 2024) [Project page] [Paper]

SuperPADL: Scaling Language-Directed Physics-Based Control with Progressive Supervised Distillation Jordan Juravsky, Yunrong Guo, Sanja Fidler, Xue Bin Peng ACM SIGGRAPH 2024 [Project page] [Paper]

Flexible Motion In-betweening with Diffusion Models Setareh Cohan, Guy Tevet, Daniele Reda, Xue Bin Peng, Michiel van de Panne ACM SIGGRAPH 2024 [Project page] [Paper]

|

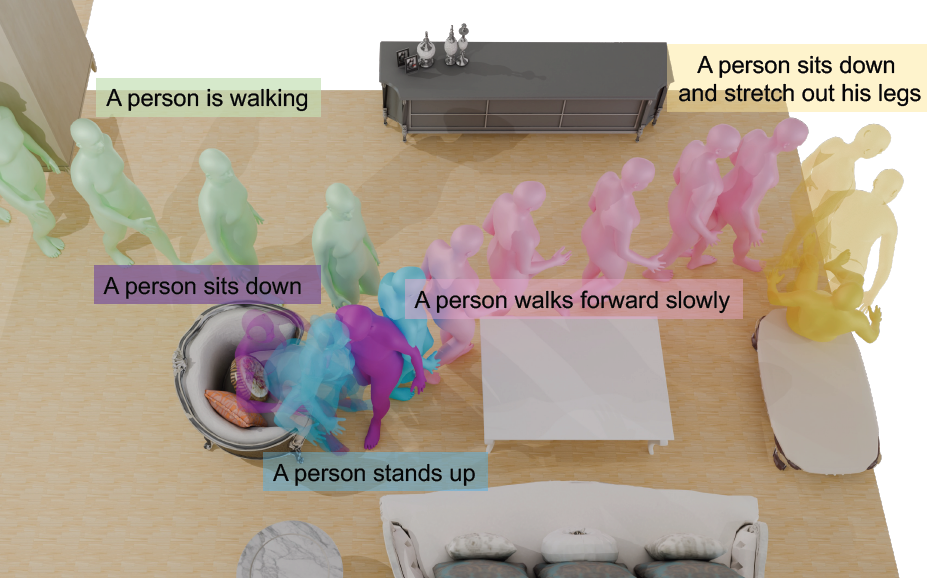

Generating Human Interaction Motions in Scenes with Text Control Hongwei Yi, Justus Thies, Michael J. Black, Xue Bin Peng, Davis Rempe European Conference on Computer Vision (ECCV 2024) [Project page] [Paper] |

|

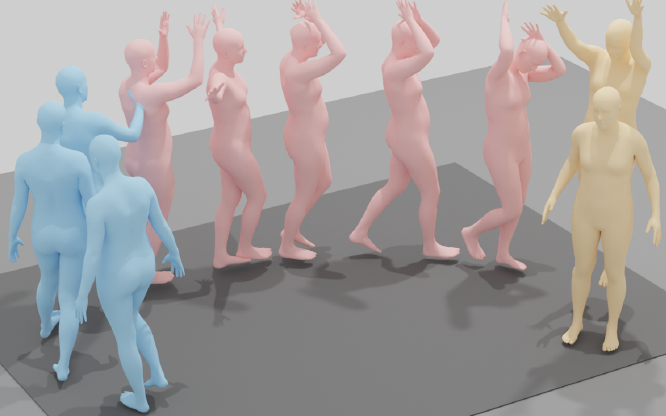

Multi-Track Timeline Control for Text-Driven 3D Human Motion Generation Mathis Petrovich, Or Litany, Umar Iqbal, Michael J. Black, Gul Varol, Xue Bin Peng, Davis Rempe CVPR Workshop on Human Motion Generation (CVPR Workshop 2024) [Project page] [Paper] |

|

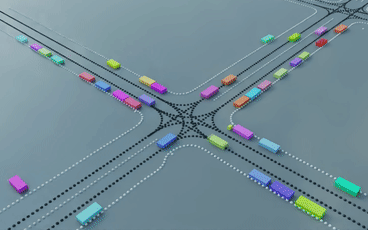

Trajeglish: Traffic Modeling as Next-Token Prediction Jonah Philion, Xue Bin Peng, Sanja Fidler International Conference on Learning Representations (ICLR 2024) [Project page] [Paper] |

— 2023 —

|

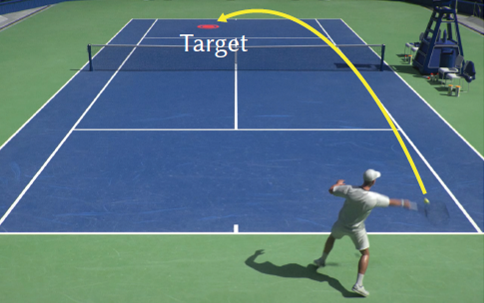

Learning Physically Simulated Tennis Skills from Broadcast Videos Haotian Zhang, Ye Yuan, Viktor Makoviychuk, Yunrong Guo, Sanja Fidler, Xue Bin Peng, Kayvon Fatahalian ACM Transactions on Graphics (Proc. SIGGRAPH 2023) Best Paper Honourable Mention [Project page] [Paper] |

|

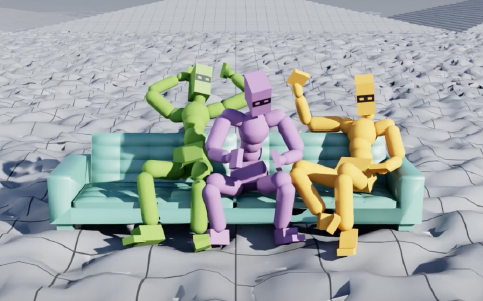

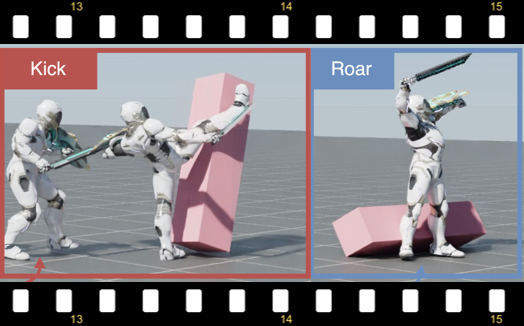

Synthesizing Physical Character-Scene Interactions Mohamed Hassan, Yunrong Guo, Tingwu Wang, Michael Black, Sanja Fidler, Xue Bin Peng ACM SIGGRAPH 2023 [Project page] [Paper] |

|

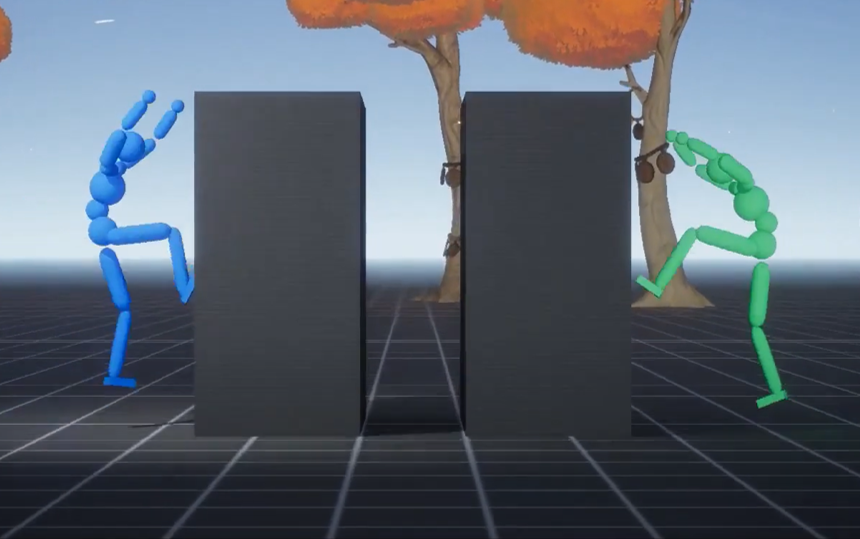

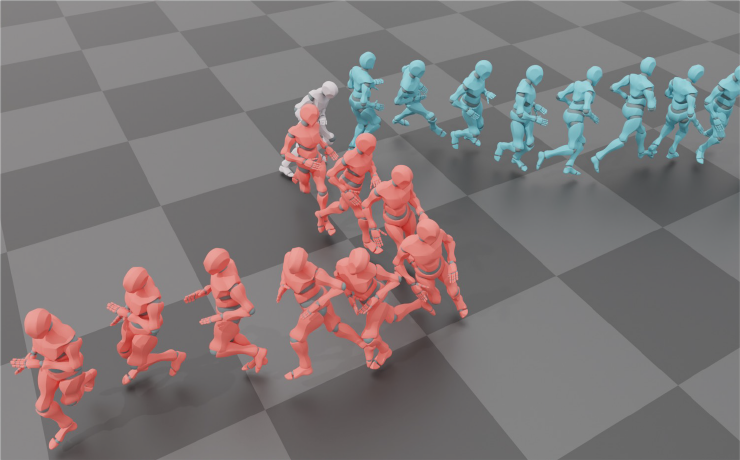

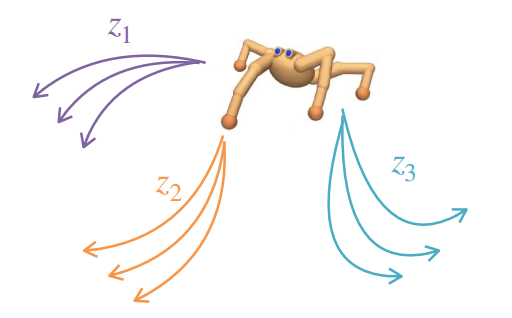

CALM: Conditional Adversarial Latent Models for Directable Virtual Characters Chen Tessler, Yoni Kasten, Yunrong Guo, Shie Mannor, Gal Chechik, Xue Bin Peng ACM SIGGRAPH 2023 [Project page] [Paper] |

|

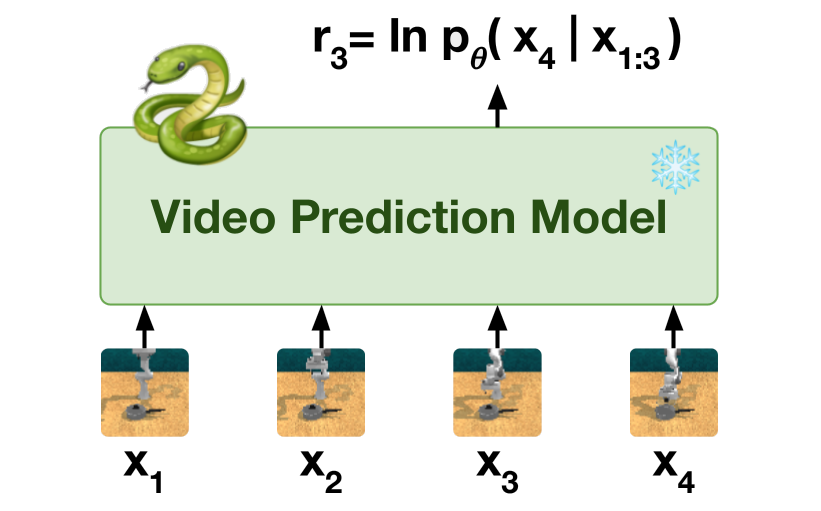

Video Prediction Models as Rewards for Reinforcement Learning Alejandro Escontrela, Ademi Adeniji, Wilson Yan, Ajay Jain, Xue Bin Peng, Ken Goldberg, Youngwoon Lee, Danijar Hafner, Pieter Abbeel Neural Information Processing Systems (NeurIPS 2023) [Project page] [Paper] |

|

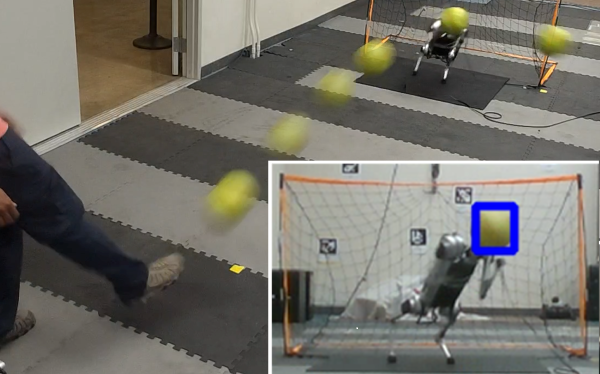

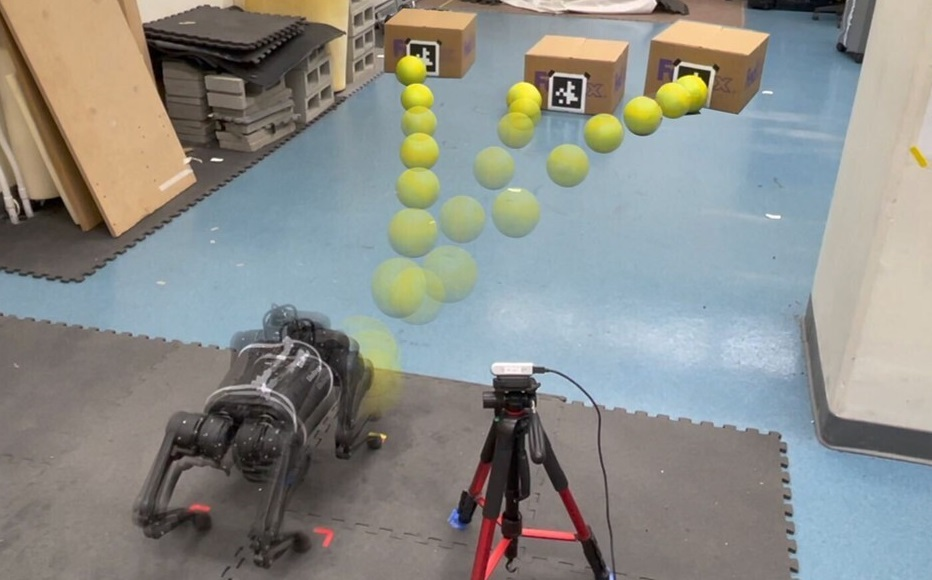

Creating a Dynamic Quadrupedal Robotic Goalkeeper with Reinforcement Learning Xiaoyu Huang, Zhongyu Li, Yanzhen Xiang, Yiming Ni, Yufeng Chi, Yunhao Li, Lizhi Yang, Xue Bin Peng, and Koushil Sreenath IEEE International Conference on Intelligent Robots and Systems (IROS 2023) [Project page] [Paper] |

Learning and Adapting Agile Locomotion Skills by Transferring Experience Laura Smith, J. Chase Kew, Tianyu Li, Linda Luu, Xue Bin Peng, Sehoon Ha, Jie Tan, Sergey Levine Robotics: Science and Systems (RSS 2023) [Project page] [Paper]

|

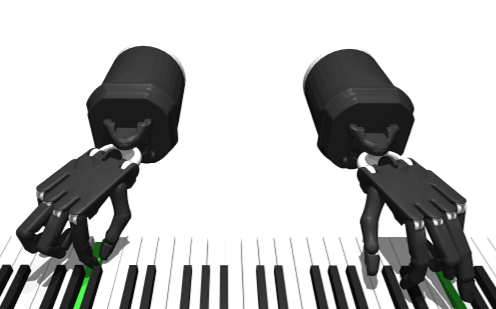

RoboPianist: Dexterous Piano Playing with Deep Reinforcement Learning Kevin Zakka, Philipp Wu, Laura Smith, Nimrod Gileadi, Taylor Howell, Xue Bin Peng, Sumeet Singh, Yuval Tassa, Pete Florence, Andy Zeng, Pieter Abbeel Conference on Robot Learning (CoRL 2023) [Project page] [Paper] |

Trace and Pace: Controllable Pedestrian Animation via Guided Trajectory Diffusion Davis Rempe, Zhengyi Luo, Xue Bin Peng, Ye Yuan, Kris Kitani, Karsten Kreis, Sanja Fidler, Or Litany Conference on Computer Vision and Pattern Recognition (CVPR 2023) [Project page] [Paper]

|

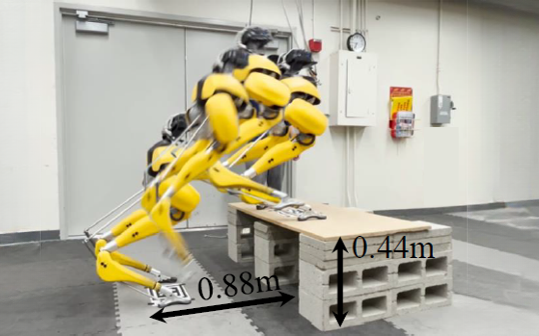

Robust and Versatile Bipedal Jumping Control through Reinforcement Learning Zhongyu Li, Xue Bin Peng, Pieter Abbeel, Sergey Levine, Glen Berseth, Koushil Sreenath Robotics: Science and Systems (RSS 2023) [Project page] [Paper] |

|

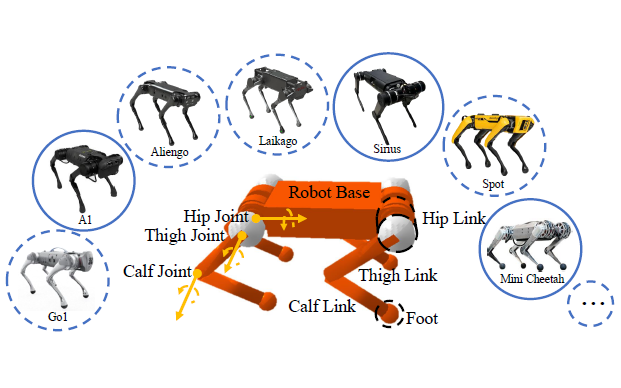

GenLoco: Generalized Locomotion Controllers for Quadrupedal Robots Gilbert Feng, Hongbo Zhang, Zhongyu Li, Xue Bin Peng, Bhuvan Basireddy, Linzhu Yue, Zhitao Song, Lizhi Yang, Yunhui Liu, Koushil Sreenath, Sergey Levine Conference on Robot Learning (CoRL 2023) [Project page] [Paper] |

— 2022 —

Unsupervised Reinforcement Learning with Contrastive Intrinsic Control Michael Laskin, Hao Liu, Xue Bin Peng, Denis Yarats, Aravind Rajeswaran, Pieter Abbeel Neural Information Processing Systems (NeurIPS 2022) [Project page] [Paper]

|

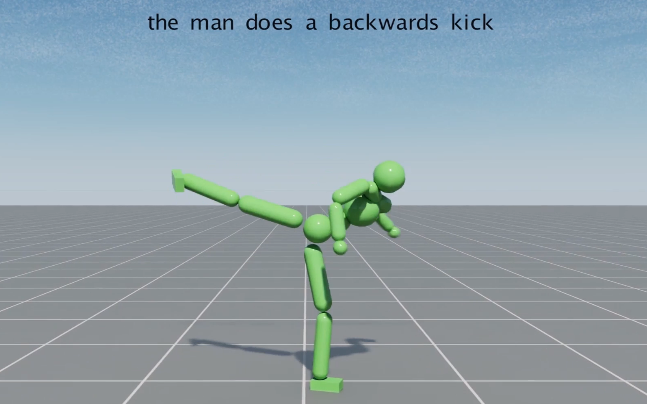

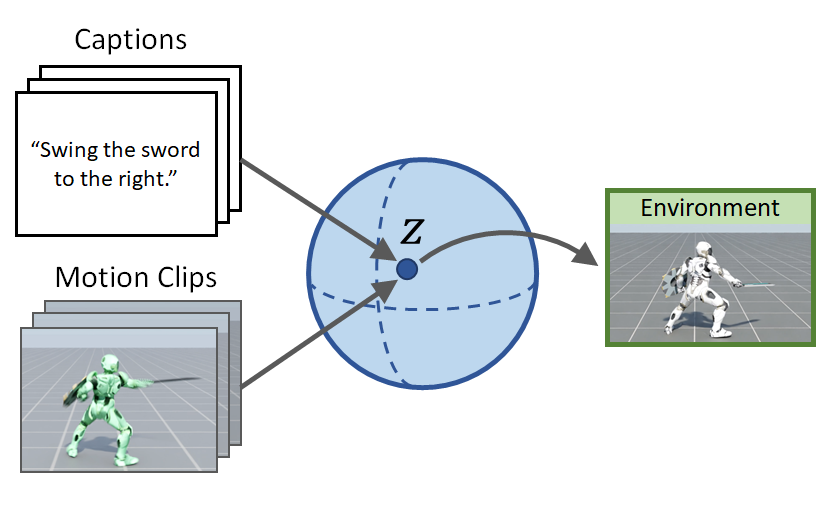

PADL: Language-Directed Physics-Based Character Control Jordan Juravsky, Yunrong Guo, Sanja Fidler, Xue Bin Peng ACM SIGGRAPH Asia 2022 [Project page] [Paper] |

Adversarial Motion Priors Make Good Substitutes for Complex Reward Functions Alejandro Escontrela, Xue Bin Peng, Wenhao Yu, Tingnan Zhang, Atil Iscen, Ken Goldberg, Pieter Abbeel IEEE International Conference on Intelligent Robots and Systems (IROS 2022) [Project page] [Paper]

|

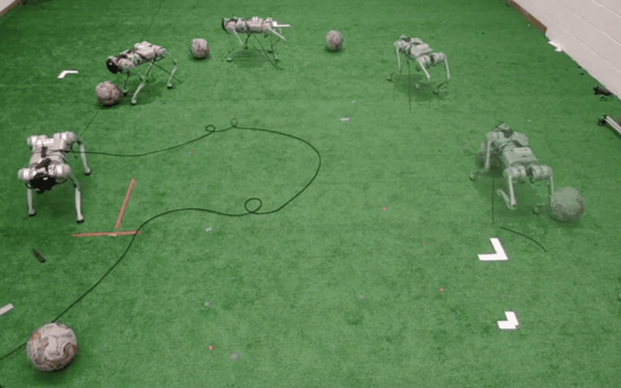

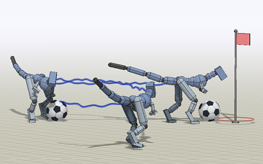

Hierarchical Reinforcement Learning for Precise Soccer Shooting Skills using a Quadrupedal Robot Yandong Ji, Zhongyu Li, Yinan Sun, Xue Bin Peng, Sergey Levine, Glen Berseth, Koushil Sreenath IEEE International Conference on Intelligent Robots and Systems (IROS 2022) [Project page] [Paper] |

|

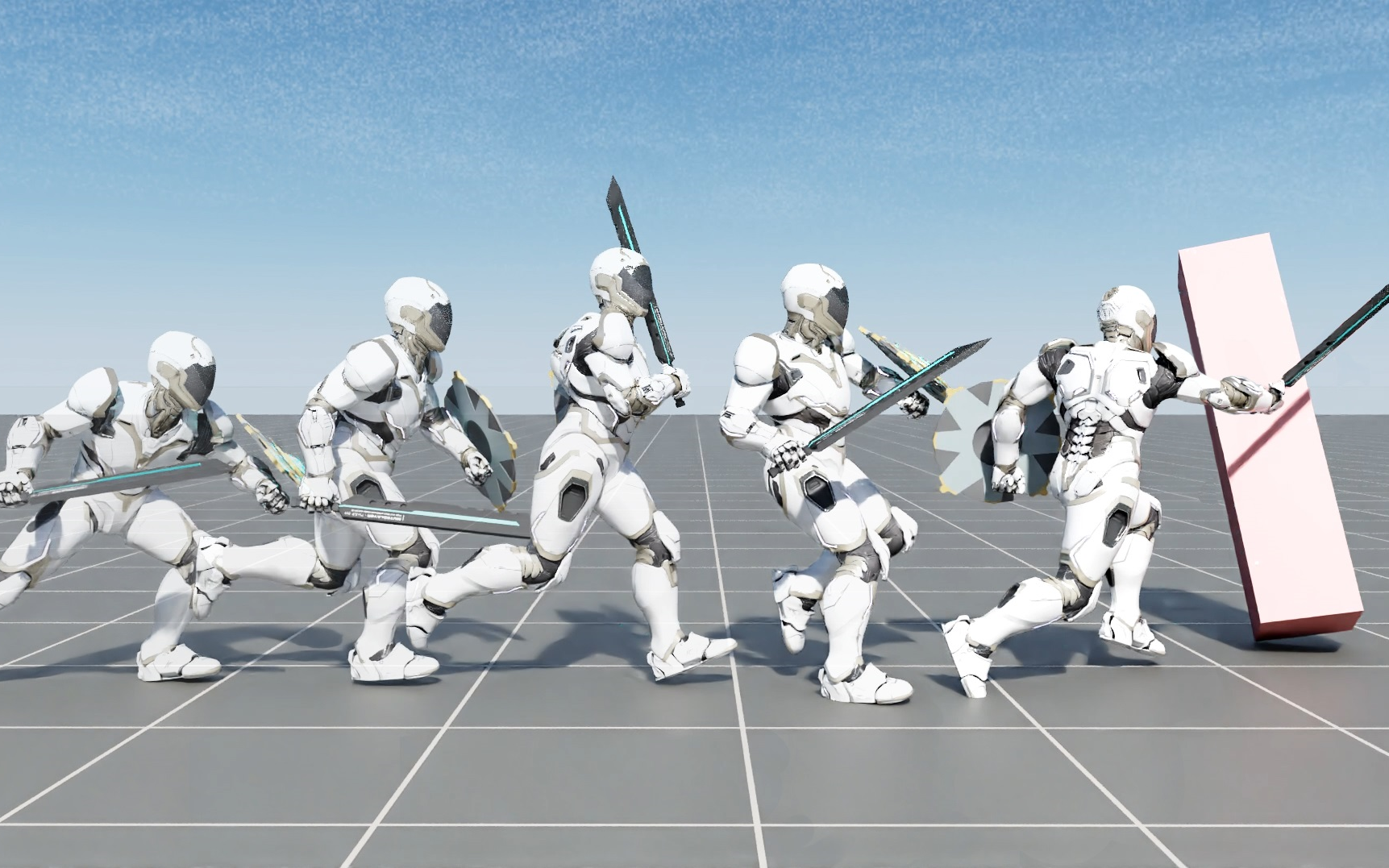

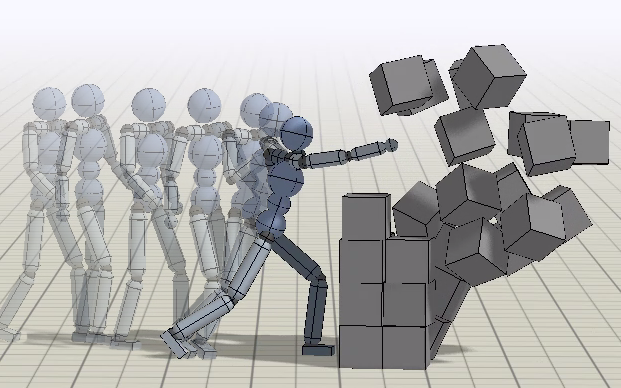

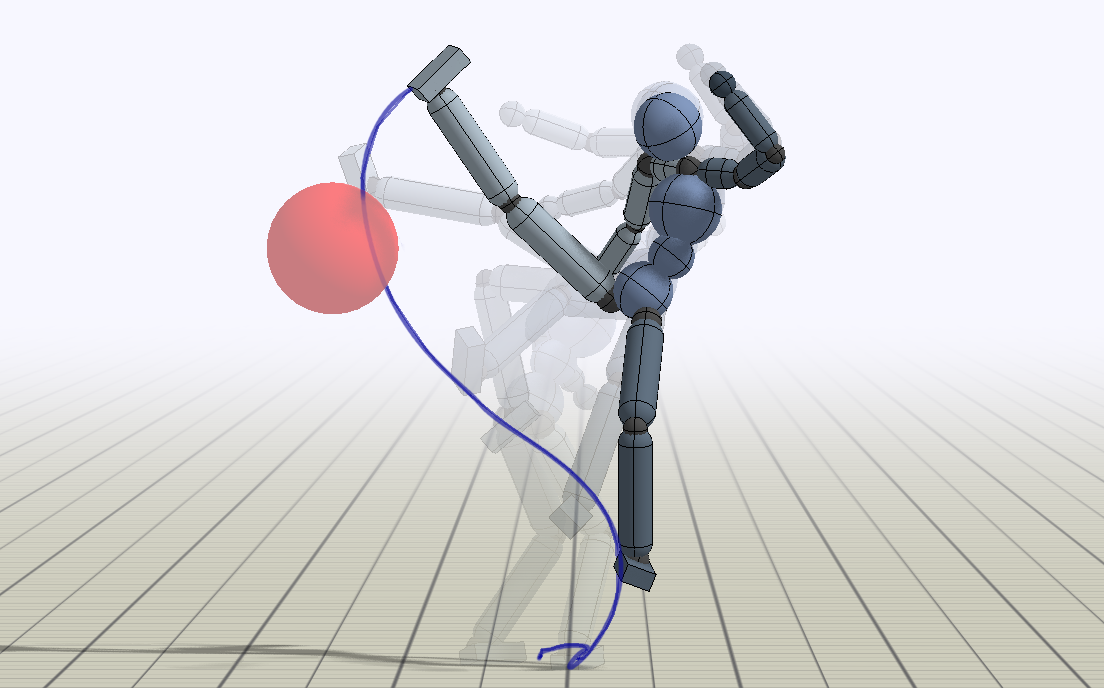

ASE: Large-Scale Reusable Adversarial Skill Embeddings for Physically Simulated Characters Xue Bin Peng, Yunrong Guo, Lina Halper, Sergey Levine, Sanja Fidler ACM Transactions on Graphics (Proc. SIGGRAPH 2022) [Project page] [Paper] |

Legged Robots that Keep on Learning: Fine-Tuning Locomotion Policies in the Real World Laura Smith, J. Chase Kew, Xue Bin Peng, Sehoon Ha, Jie Tan, Sergey Levine IEEE International Conference on Robotics and Automation (ICRA 2022) [Project page] [Paper]

— 2021 —

|

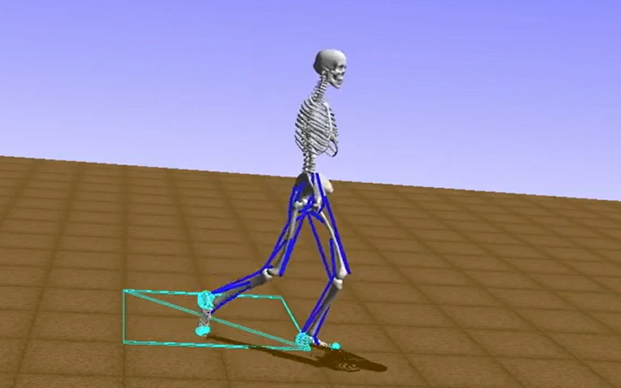

Deep Reinforcement Learning for Modeling Human Locomotion Control in Neuromechanical Simulation Seungmoon Song, Łukasz Kidziński, Xue Bin Peng, Carmichael Ong, Jennifer Hicks, Sergey Levine, Christopher G. Atkeson, Scott L. Delp Journal of NeuroEngineering and Rehabilitation 2021 [Project page] [Paper] |

|

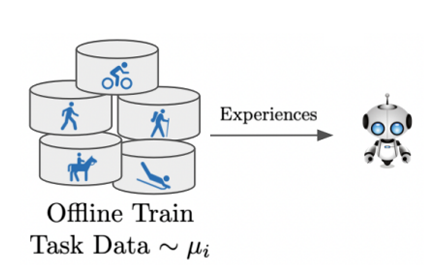

Offline Meta-Reinforcement Learning with Advantage Weighting Eric Mitchell, Rafael Rafailov, Xue Bin Peng, Sergey Levine, Chelsea Finn International Conference on Machine Learning (ICML 2021) [Project page] [Paper] |

|

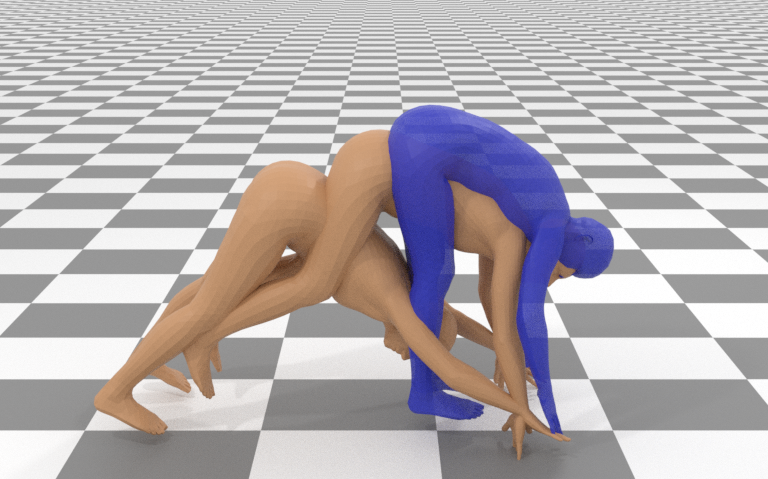

AMP: Adversarial Motion Priors for Stylized Physics-Based Character Control Xue Bin Peng, Ze Ma, Pieter Abbeel, Sergey Levine, Angjoo Kanazawa ACM Transactions on Graphics (Proc. SIGGRAPH 2021) [Project page] [Paper] |

Reinforcement Learning for Robust Parameterized Locomotion Control of Bipedal Robots Zhongyu Li, Xuxin Cheng, Xue Bin Peng, Pieter Abbeel, Sergey Levine, Glen Berseth, Koushil Sreenath IEEE International Conference on Robotics and Automation (ICRA 2021) [Project page] [Paper]

— 2020 —

|

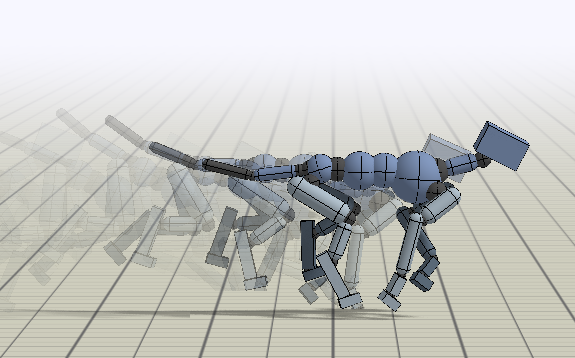

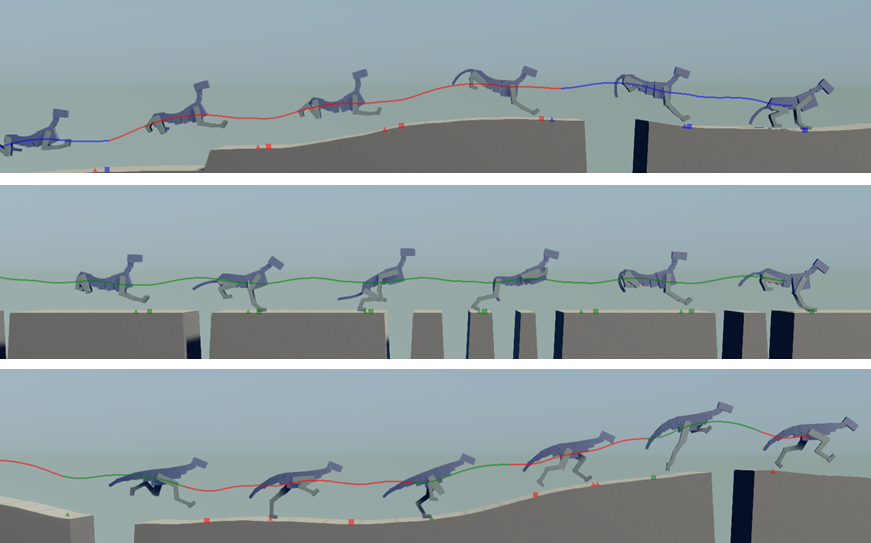

Learning Agile Robotic Locomotion Skills by Imitating Animals Xue Bin Peng, Erwin Coumans, Tingnan Zhang, Tsang-Wei Edward Lee, Jie Tan, Sergey Levine Robotics: Science and Systems (RSS 2020) Best Paper Award [Project page] [Paper] |

Reinforcement Learning with Competitive Ensembles of Information-Constrained Primitives Anirudh Goyal, Shagun Sodhani, Jonathan Binas, Xue Bin Peng, Sergey Levine, Yoshua Bengio International Conference on Learning Representations (ICLR 2020) [Project page] [Paper]

— 2019 —

Reward-Conditioned Policies Aviral Kumar, Xue Bin Peng, Sergey Levine arXiv Preprint 2019 [Project page] [Paper]

On Learning Symmetric Locomotion Farzad Abdolhosseini, Hung Yu Ling, Zhaoming Xie, Xue Bin Peng, Michiel van de Panne ACM SIGGRAPH Conference on Motion, Interaction, and Games (MIG 2019) [Project page] [Paper]

Advantage-Weighted Regression: Simple and Scalable Off-Policy Reinforcement Learning Xue Bin Peng, Aviral Kumar, Grace Zhang, Sergey Levine arXiv Preprint 2019 [Project page] [Paper]

|

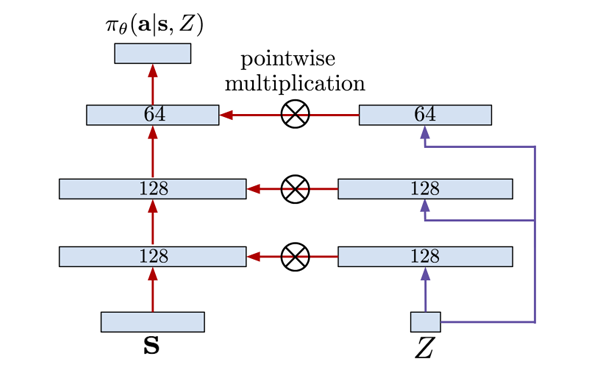

MCP: Learning Composable Hierarchical Control with Multiplicative Compositional Policies Xue Bin Peng, Michael Chang, Grace Zhang, Pieter Abbeel, Sergey Levine Neural Information Processing Systems (NeurIPS 2019) [Project page] [Paper] |

Variational Discriminator Bottleneck: Improving Imitation Learning, Inverse RL, and GANs by Constraining Information Flow Xue Bin Peng, Angjoo Kanazawa, Sam Toyer, Pieter Abbeel, Sergey Levine International Conference on Learning Representations (ICLR 2019) [Project page] [Paper]

— 2018 —

|

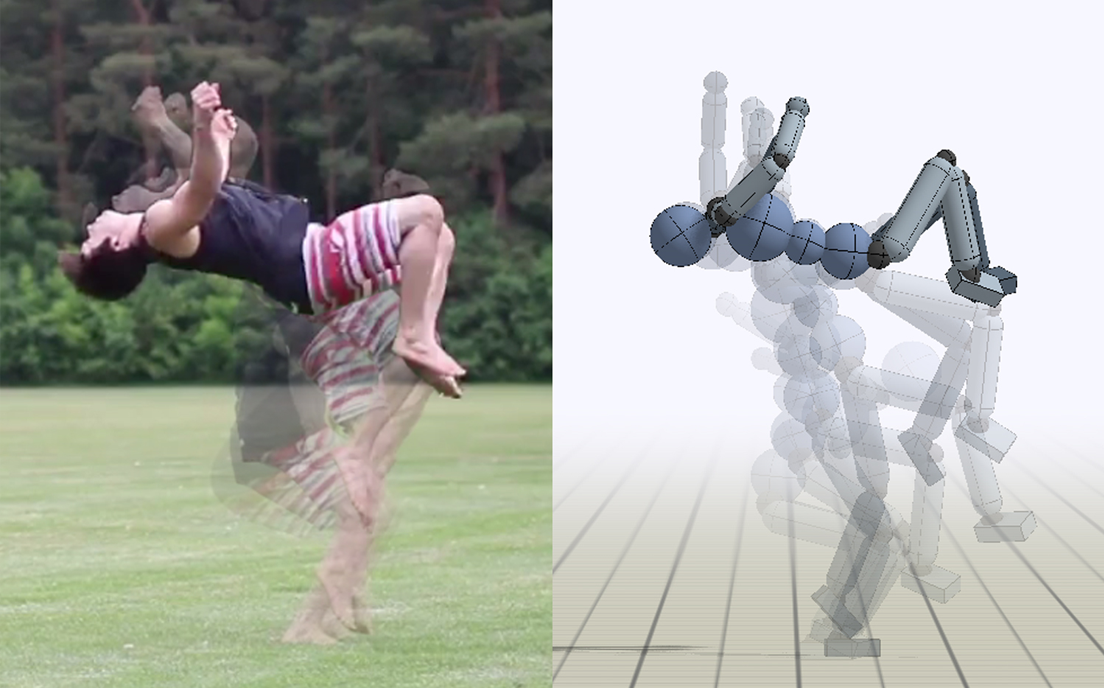

SFV: Reinforcement Learning of Physical Skills from Videos Xue Bin Peng, Angjoo Kanazawa, Jitendra Malik, Pieter Abbeel, Sergey Levine ACM Transactions on Graphics (Proc. SIGGRAPH Asia 2018) [Project page] [Paper] |

DeepMimic: Example-Guided Deep Reinforcement Learning of Physics-Based Character Skills Xue Bin Peng, Pieter Abbeel, Sergey Levine, Michiel van de Panne ACM Transactions on Graphics (Proc. SIGGRAPH 2018) [Project page] [Paper]

|

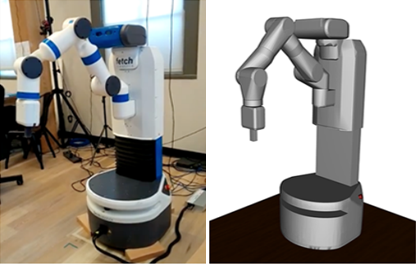

Sim-to-Real Transfer of Robotic Control with Dynamics Randomization Xue Bin Peng, Marcin Andrychowicz, Wojciech Zaremba, Pieter Abbeel IEEE International Conference on Robotics and Automation (ICRA 2018) [Project page] [Paper] |

— 2017 —

|

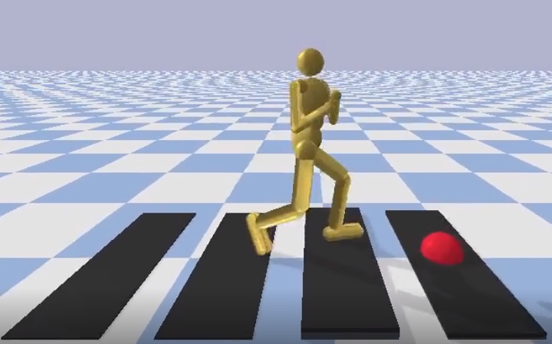

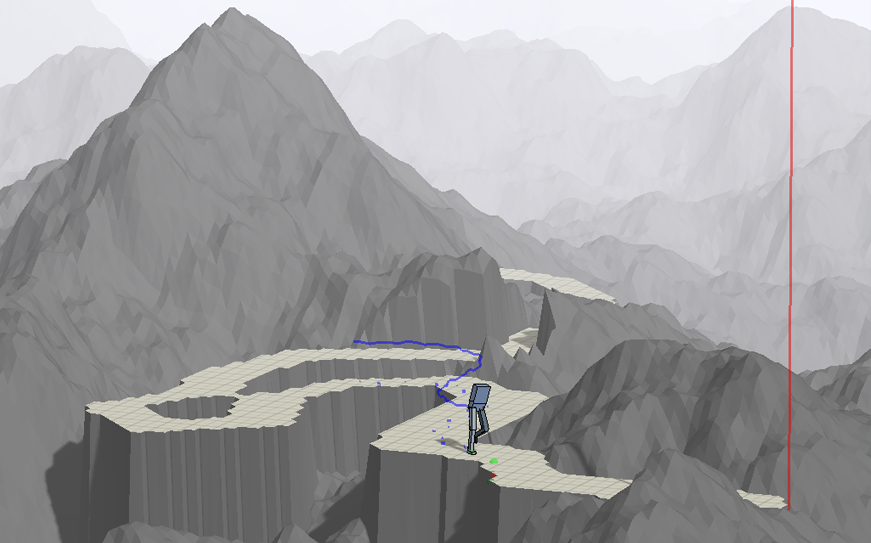

DeepLoco: Developing Locomotion Skills Using Hierarchical Deep Reinforcement Learning Xue Bin Peng, Glen Berseth, KangKang Yin, Michiel van de Panne ACM Transactions on Graphics (Proc. SIGGRAPH 2017) [Project page] [Paper] |

Learning Locomotion Skills Using DeepRL: Does the Choice of Action Space Matter? Xue Bin Peng, Michiel van de Panne ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2017 Best Student Paper Award [Project page] [Paper]

— 2016 —

|

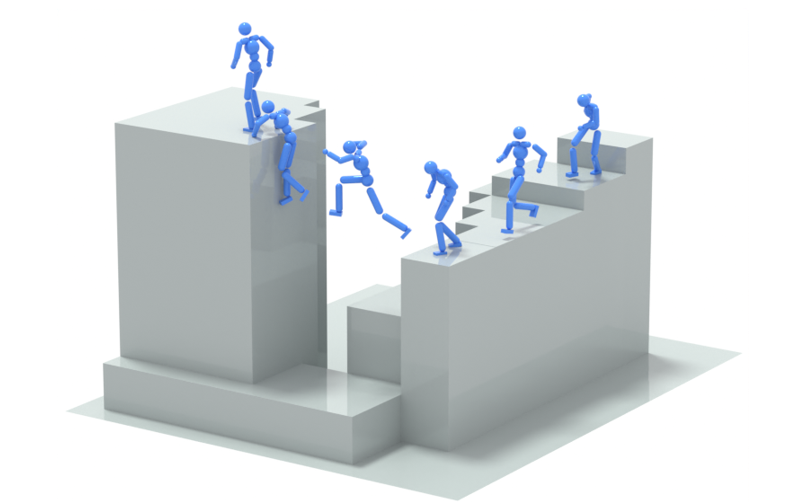

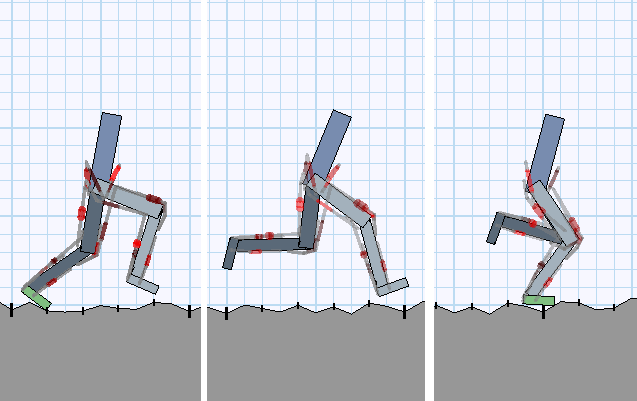

Terrain-Adaptive Locomotion Skills Using Deep Reinforcement Learning Xue Bin Peng, Glen Berseth, Michiel van de Panne ACM Transactions on Graphics (Proc. SIGGRAPH 2016) [Project page] [Paper] |

— 2015 —

|

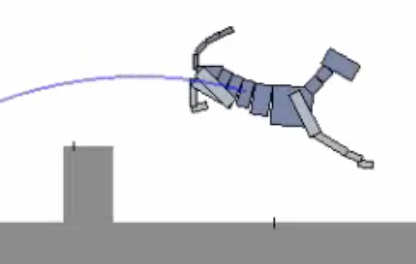

Dynamic Terrain Traversal Skills Using Reinforcement Learning Xue Bin Peng, Glen Berseth, Michiel van de Panne ACM Transactions on Graphics (Proc. SIGGRAPH 2015) [Project page] [Paper] |

Thesis

Ph.D. Thesis Acquiring Motor Skills Through Motion Imitation and Reinforcement Learning University of California, Berkeley 2021 [Project page] [Thesis]

M.Sc. Thesis Developing Locomotion Skills with Deep Reinforcement Learning University of British Columbia 2017 [Project page] [Thesis]