Learning Physically Simulated Tennis Skills from Broadcast Videos

Best Paper Honourable Mention

Abstract

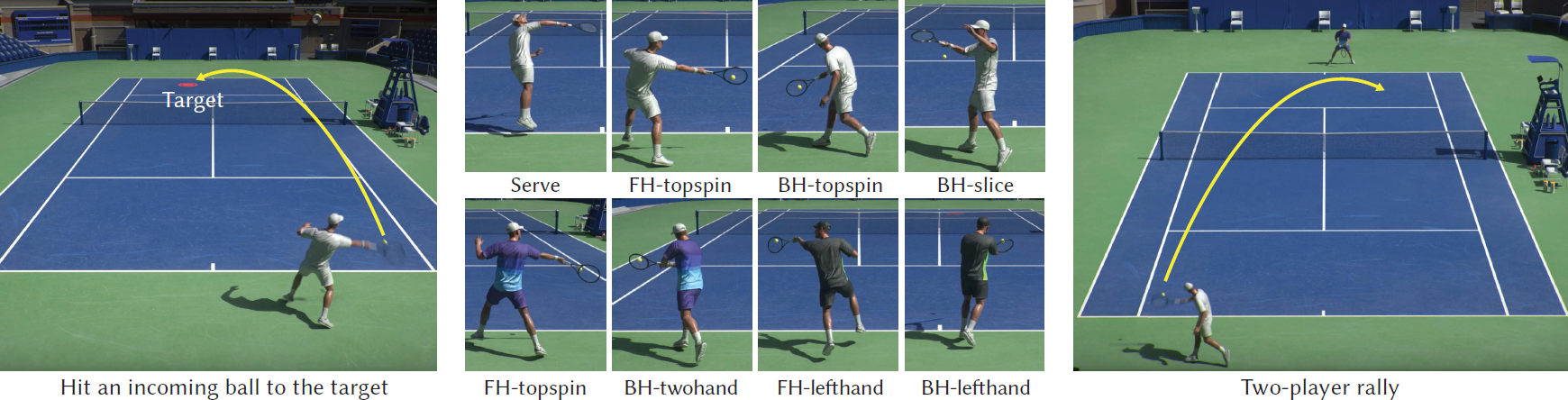

We present a system that learns diverse, physically simulated tennis skills from large-scale demonstrations of tennis play harvested from broadcast videos. Our approach is built upon hierarchical models, combining a low-level imitation policy and a high-level motion planning policy to steer the character in a motion embedding learned from broadcast videos. When deployed at scale on large video collections that encompass a vast set of examples of real-world tennis play, our approach can learn complex tennis shotmaking skills and realistically chain together multiple shots into extended rallies, using only simple rewards and without explicit annotations of stroke types. To address the low quality of motions extracted from broadcast videos, we correct the estimated motion with physics-based imitation, and use a hybrid control policy that overrides erroneous aspects of the learned motion embedding with corrections predicted by the high-level policy. We demonstrate that our system produces controllers for physically simulated tennis players that can accurately hit the incoming ball to target positions using a diverse array of strokes (serves, forehands, and backhands), spins (topspins and slices), and playing styles (one/two-handed backhands, left/right-handed play). Overall, our system can synthesize two physically simulated characters playing extended tennis rallies with simulated racket and ball dynamics.

Paper: [PDF] Code: [GitHub] Webpage: [Link]
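The abstract describes a two-level controller: the high-level policy picks a point in the learned motion embedding and also predicts corrections that override erroneous parts of the decoded motion, and the low-level imitation policy tracks the resulting target. The sketch below illustrates only that data flow, in plain Python under stated assumptions. The names `HybridAction`, `hybrid_target_pose`, `ball_target_reward`, and `control_step`, the dictionary pose representation, and the exponential landing-error reward are all hypothetical illustrations, not the authors' released code. The learned networks are replaced by toy callables.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical pose representation: joint name -> list of joint-angle values.
Pose = Dict[str, List[float]]


@dataclass
class HybridAction:
    """Hypothetical high-level action: a latent code plus per-joint overrides."""
    latent: List[float]                               # point in the motion embedding
    corrections: Pose = field(default_factory=dict)   # joints whose decoded targets are replaced


def hybrid_target_pose(action: HybridAction,
                       decoder: Callable[[List[float]], Pose]) -> Pose:
    """Decode the latent into a kinematic target, then replace the corrected joints."""
    # Copy the decoder output so the override never mutates shared data.
    target = {joint: list(values) for joint, values in decoder(action.latent).items()}
    for joint, values in action.corrections.items():
        target[joint] = list(values)  # the high-level correction wins over the decoded value
    return target


def ball_target_reward(landing_xy: Tuple[float, float],
                       target_xy: Tuple[float, float],
                       scale: float = 1.0) -> float:
    """Assumed reward shape: 1.0 when the ball lands on target, decaying with squared distance."""
    dx = landing_xy[0] - target_xy[0]
    dy = landing_xy[1] - target_xy[1]
    return math.exp(-(dx * dx + dy * dy) / scale)


def control_step(state: Pose,
                 high_level: Callable[[Pose], HybridAction],
                 decoder: Callable[[List[float]], Pose],
                 low_level: Callable[[Pose, Pose], Pose]) -> Pose:
    """One control tick: plan in the embedding, apply overrides, then track with the low-level policy."""
    action = high_level(state)
    target = hybrid_target_pose(action, decoder)
    return low_level(state, target)


# Toy stand-ins for the learned networks (hypothetical, for illustration only).
def toy_decoder(z: List[float]) -> Pose:
    return {"shoulder": [z[0]], "wrist": [z[1]]}


def toy_high_level(state: Pose) -> HybridAction:
    return HybridAction(latent=[0.5, 0.1], corrections={"wrist": [0.9]})


def toy_low_level(state: Pose, target: Pose) -> Pose:
    # Proportional tracking stand-in: command = target - current, per joint.
    return {j: [t - c for t, c in zip(target[j], state[j])] for j in target}


if __name__ == "__main__":
    state = {"shoulder": [0.0], "wrist": [0.0]}
    print(control_step(state, toy_high_level, toy_decoder, toy_low_level))
    print(ball_target_reward((0.0, 0.0), (3.0, 4.0), scale=25.0))
```

Keeping the override outside the decoder means the embedding itself is left untouched: only the joints the high-level policy chooses to correct (in this toy, the wrist, which is an assumption) are replaced before the low-level policy tracks the target.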

BibTeX

@article{zhang2023vid2player3d,
  author = {Zhang, Haotian and Yuan, Ye and Makoviychuk, Viktor and Guo, Yunrong and Fidler, Sanja and Peng, Xue Bin and Fatahalian, Kayvon},
  title = {Learning Physically Simulated Tennis Skills from Broadcast Videos},
  year = {2023},
  issue_date = {August 2023},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {42},
  number = {4},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3592408},
  doi = {10.1145/3592408},
  journal = {ACM Trans. Graph.},
  month = {jul},
  articleno = {95},
  numpages = {14},
  keywords = {imitation learning, physics-based character animation, reinforcement learning}
}